Author: Jason Brownlee

Weight initialization is an important design choice when developing deep learning neural network models.

Historically, weight initialization involved using small random numbers, although over the last decade, more specific heuristics have been developed that use information, such as the type of activation function that is being used and the number of inputs to the node.

These more tailored heuristics can result in more effective training of neural network models using the stochastic gradient descent optimization algorithm.

In this tutorial, you will discover how to implement weight initialization techniques for deep learning neural networks.

After completing this tutorial, you will know:

- Weight initialization is used to define the initial values for the parameters in neural network models prior to training the models on a dataset.

- How to implement the xavier and normalized xavier weight initialization heuristics used for nodes that use the Sigmoid or Tanh activation functions.

- How to implement the he weight initialization heuristic used for nodes that use the ReLU activation function.

Let’s get started.

Weight Initialization for Deep Learning Neural Networks


Tutorial Overview

This tutorial is divided into three parts; they are:

- Weight Initialization for Neural Networks

- Weight Initialization for Sigmoid and Tanh

  - Xavier Weight Initialization

  - Normalized Xavier Weight Initialization

- Weight Initialization for ReLU

  - He Weight Initialization

Weight Initialization for Neural Networks

Weight initialization is an important consideration in the design of a neural network model.

The nodes in neural networks are composed of parameters referred to as weights used to calculate a weighted sum of the inputs.
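As a small illustration of the weighted sum, the sketch below computes it for a single node. The input values, weights, and the `bias` term are made up for the example and are not taken from the tutorial.

```python
# sketch: the weighted sum calculated by a single node (illustrative values)
from numpy import array, dot

# outputs of the previous layer (made-up values)
inputs = array([0.5, -1.0, 2.0])
# one weight per input (made-up values)
weights = array([0.1, 0.2, -0.3])
# assumed bias term, not discussed in the text
bias = 0.0

# weighted sum: 0.5*0.1 + (-1.0)*0.2 + 2.0*(-0.3) + bias
activation = dot(weights, inputs) + bias
print(activation)
```

The weights are the values that weight initialization must choose before training begins.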

Neural network models are fit using an optimization algorithm called stochastic gradient descent that incrementally changes the network weights to minimize a loss function, hopefully resulting in a set of weights for the model that is capable of making useful predictions.

This optimization algorithm requires a starting point in the space of possible weight values from which to begin the optimization process. Weight initialization is a procedure to set the weights of a neural network to small random values that define the starting point for the optimization (learning or training) of the neural network model.

… training deep models is a sufficiently difficult task that most algorithms are strongly affected by the choice of initialization. The initial point can determine whether the algorithm converges at all, with some initial points being so unstable that the algorithm encounters numerical difficulties and fails altogether.

— Page 301, Deep Learning, 2016.

Each time a neural network is initialized, a different set of weights is used, resulting in a different starting point for the optimization process and potentially in a different final set of weights with different performance characteristics.
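A minimal sketch of this effect is shown below, assuming NumPy's `default_rng` generator. The helper `init_weights()` and the range of -0.3 to 0.3 are hypothetical choices made for illustration only.

```python
# sketch: different seeds give different starting points, the same seed repeats one
from numpy.random import default_rng

def init_weights(n_inputs, seed):
    # hypothetical helper: small random weights from a seeded generator
    rng = default_rng(seed)
    return rng.uniform(-0.3, 0.3, size=n_inputs)

w_a = init_weights(5, seed=1)
w_b = init_weights(5, seed=2)
w_a_again = init_weights(5, seed=1)

print(w_a)
print(w_b)
# report whether the repeated seed reproduced the first set of weights
print((w_a == w_a_again).all())
```

Fixing the seed is one way to make the starting point repeatable when comparing initialization schemes.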

For more on the expectation of different results each time the same algorithm is trained on the same dataset, see the tutorial:

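We cannot initialize all weights to the value 0.0 because the optimization algorithm requires some asymmetry in the error gradient to begin searching effectively. If every weight starts at the same value, the nodes in a layer receive identical updates and cannot learn different features.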

For more on why we initialize neural networks with random weights, see the tutorial "Why Initialize a Neural Network with Random Weights?" listed under Further Reading.
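The need for asymmetry can be sketched with a tiny hand-written network. The architecture (3 inputs, 4 Tanh hidden nodes, 1 linear output), the squared error loss, and the helper `hidden_gradient()` are all assumptions made for this illustration.

```python
# sketch: identical initial weights give the hidden nodes identical gradients
from numpy import zeros, full, tanh, outer, allclose
from numpy.random import default_rng

rng = default_rng(1)
x = rng.uniform(-1.0, 1.0, size=3)  # one made-up input pattern
y = 1.0                             # made-up target value

def hidden_gradient(w_hidden, w_out):
    # forward pass: 3 inputs -> 4 tanh hidden nodes -> 1 linear output
    h = tanh(w_hidden @ x)
    y_hat = w_out @ h
    # backward pass for the loss 0.5 * (y_hat - y)^2
    delta = (y_hat - y) * w_out * (1.0 - h ** 2)
    # gradient with respect to the hidden weights, one row per hidden node
    return outer(delta, x)

# all weights 0.0, all weights the same constant, and small random weights
grad_zero = hidden_gradient(zeros((4, 3)), zeros(4))
grad_const = hidden_gradient(full((4, 3), 0.5), full(4, 0.5))
grad_rand = hidden_gradient(rng.uniform(-0.3, 0.3, size=(4, 3)), rng.uniform(-0.3, 0.3, size=4))

# largest gradient magnitude with all-zero weights
print(abs(grad_zero).max())
# are all rows (hidden nodes) given the same gradient?
print(allclose(grad_const, grad_const[0]))
print(allclose(grad_rand, grad_rand[0]))
```

In this sketch the all-zero weights produce no gradient for the hidden layer, the constant weights give every hidden node the same gradient, and only the random weights give each node its own update.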

Historically, weight initialization followed simple heuristics, such as:

- Small random values in the range [-0.3, 0.3]

- Small random values in the range [0, 1]

- Small random values in the range [-1, 1]

These heuristics continue to work well in general.
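The three historical ranges listed above can be sampled directly. The sketch below assumes a uniform distribution and NumPy's `default_rng`; the dictionary `samples` is only an illustrative container.

```python
# sketch: small random weights drawn uniformly from the three historical ranges
from numpy.random import default_rng

rng = default_rng(1)
n_weights = 1000
ranges = [(-0.3, 0.3), (0.0, 1.0), (-1.0, 1.0)]

samples = {}
for lower, upper in ranges:
    # draw weights uniformly from [lower, upper)
    weights = rng.uniform(lower, upper, size=n_weights)
    samples[(lower, upper)] = weights
    # summarize the bounds, then the min, max, mean, and standard deviation
    print(lower, upper, weights.min(), weights.max(), weights.mean(), weights.std())
```

Note that the range [0, 1] is the only one of the three that is not centered on zero, so its weights have a mean of about 0.5.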

We almost always initialize all the weights in the model to values drawn randomly from a Gaussian or uniform distribution. The choice of Gaussian or uniform distribution does not seem to matter very much, but has not been exhaustively studied. The scale of the initial distribution, however, does have a large effect on both the outcome of the optimization procedure and on the ability of the network to generalize.

— Page 302, Deep Learning, 2016.
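The effect of scale can be sketched by passing random inputs through a stack of Tanh layers and measuring how spread out the final activations are. The layer sizes, the three standard deviations, and the helper `final_activation_std()` are arbitrary choices for illustration, not values from the book or the tutorial.

```python
# sketch: how the scale of the initial weights changes the signal in a deep tanh network
from numpy import tanh
from numpy.random import default_rng

def final_activation_std(weight_std, n_layers=10, n_nodes=256, seed=1):
    rng = default_rng(seed)
    # batch of 100 random input patterns
    a = rng.standard_normal((100, n_nodes))
    for _ in range(n_layers):
        # Gaussian weights with the chosen standard deviation
        w = rng.normal(0.0, weight_std, size=(n_nodes, n_nodes))
        a = tanh(a @ w)
    # spread of the activations leaving the last layer
    return a.std()

# a very small scale, a moderate scale of 1/sqrt(256), and a large scale
for scale in [0.001, 0.0625, 1.0]:
    print(scale, final_activation_std(scale))
```

With the very small scale the activations collapse toward zero, and with the large scale most nodes saturate near -1 or 1. Both situations make gradient-based training harder.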

Nevertheless, more tailored approaches have been developed over the last decade that have become the de facto standard, given that they may result in a slightly more effective optimization (model training) process.

These modern weight initialization techniques are divided based on the type of activation function used in the nodes that are being initialized, such as “Sigmoid and Tanh” and “ReLU.”

Next, let’s take a closer look at these modern weight initialization heuristics for nodes with Sigmoid and Tanh activation functions.

Weight Initialization for Sigmoid and Tanh

The current standard approach for initialization of the weights of neural network layers and nodes that use the Sigmoid or Tanh activation function is called “glorot” or “xavier” initialization.

It is named for Xavier Glorot, currently a research scientist at Google DeepMind, and was described in the 2010 paper by Xavier Glorot and Yoshua Bengio titled “Understanding The Difficulty Of Training Deep Feedforward Neural Networks.”

There are two versions of this weight initialization method, which we will refer to as “xavier” and “normalized xavier.”

Glorot and Bengio proposed to adopt a properly scaled uniform distribution for initialization. This is called “Xavier” initialization […] Its derivation is based on the assumption that the activations are linear. This assumption is invalid for ReLU and PReLU.

— Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, 2015.

Both approaches were derived assuming that the activation function is linear. Nevertheless, they have become the standard for nonlinear activation functions like Sigmoid and Tanh, but not ReLU.

Let’s take a closer look at each in turn.

Xavier Weight Initialization

The xavier initialization method is calculated as a random number with a uniform probability distribution (U) in the range -(1/sqrt(n)) to 1/sqrt(n), where n is the number of inputs to the node.

- weight = U [-(1/sqrt(n)), 1/sqrt(n)]

We can implement this directly in Python.

The example below assumes 10 inputs to a node, then calculates the lower and upper bounds of the range and calculates 1,000 initial weight values that could be used for the nodes in a layer or a network that uses the sigmoid or tanh activation function.

After calculating the weights, the lower and upper bounds are printed as are the min, max, mean, and standard deviation of the generated weights.

The complete example is listed below.

    # example of the xavier weight initialization
    from math import sqrt
    from numpy.random import rand
    # number of nodes in the previous layer
    n = 10
    # calculate the range for the weights
    lower, upper = -(1.0 / sqrt(n)), (1.0 / sqrt(n))
    # generate random numbers
    numbers = rand(1000)
    # scale to the desired range
    scaled = lower + numbers * (upper - lower)
    # summarize
    print(lower, upper)
    print(scaled.min(), scaled.max())
    print(scaled.mean(), scaled.std())

Running the example generates the weights and prints the summary statistics.

We can see that the bounds of the weight values are about -0.316 and 0.316. These bounds would become wider with fewer inputs and more narrow with more inputs.

We can see that the generated weights respect these bounds and that the mean weight value is close to zero with the standard deviation close to 0.18.

    -0.31622776601683794 0.31622776601683794
    -0.3157663248679193 0.3160839282916222
    0.006806069733149146 0.17777128902976705
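In practice a whole layer is initialized at once rather than a flat list of numbers. The sketch below wraps the same calculation in a hypothetical helper, `xavier_weights()`, that returns a weight matrix with one row per input and one column per node. The matrix layout and the layer size of 5 nodes are assumptions for illustration.

```python
# sketch: xavier initialization for the weight matrix of a whole layer
from math import sqrt
from numpy.random import default_rng

def xavier_weights(n_inputs, n_outputs, rng):
    # hypothetical helper: uniform weights in [-1/sqrt(n), 1/sqrt(n)]
    limit = 1.0 / sqrt(n_inputs)
    return rng.uniform(-limit, limit, size=(n_inputs, n_outputs))

rng = default_rng(1)
# layer with 10 inputs and 5 nodes
weights = xavier_weights(10, 5, rng)
print(weights.shape)
print(weights.min(), weights.max())
# the standard deviation of U[-a, a] is a / sqrt(3)
print((1.0 / sqrt(10)) / sqrt(3.0))
```

The last line prints the theoretical standard deviation for 10 inputs, which gives a reference value for the sample standard deviation reported by the example above.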

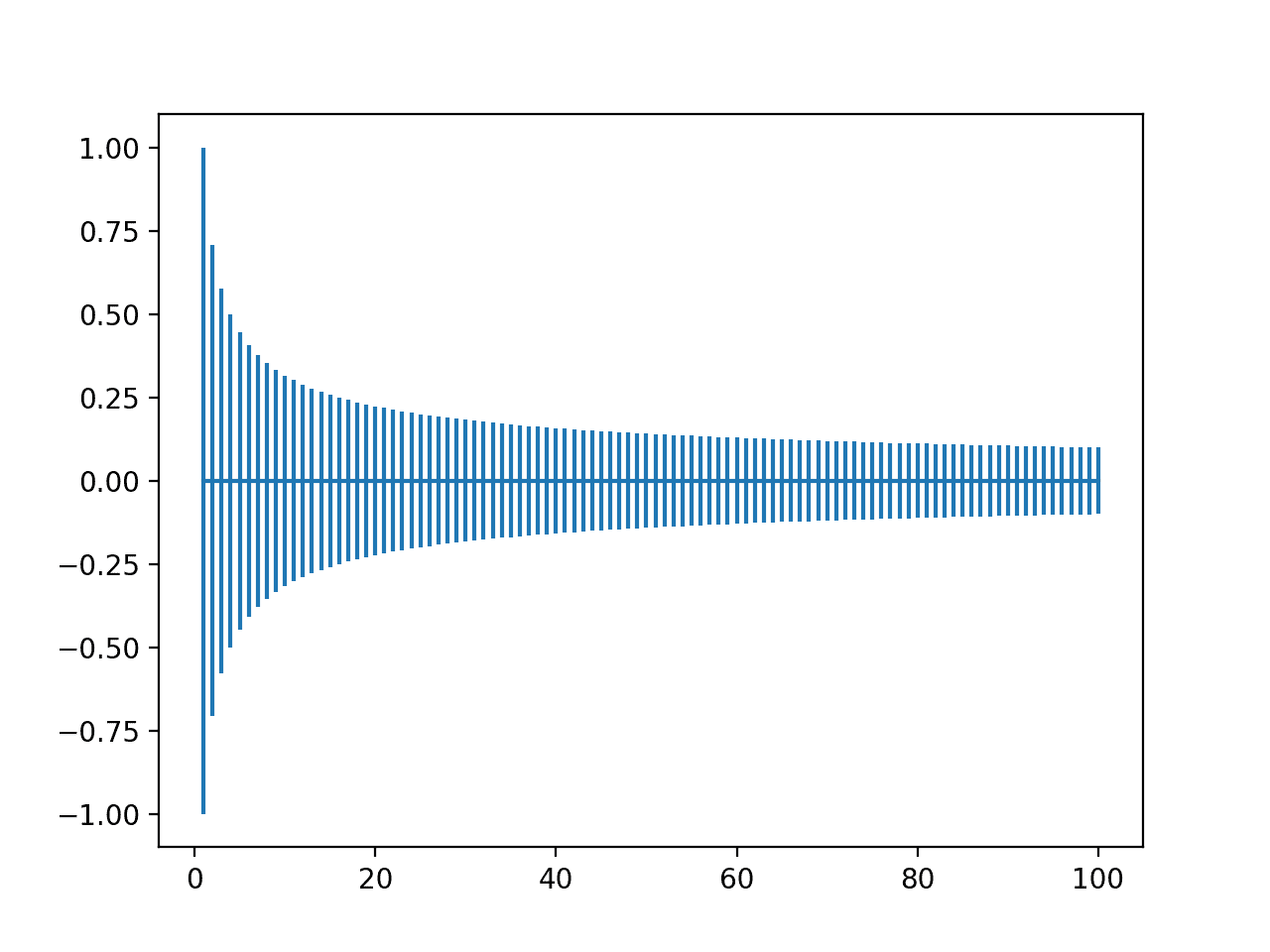

It can also help to see how the spread of the weights changes with the number of inputs.

For this, we can calculate the bounds on the weight initialization with different numbers of inputs from 1 to 100 and plot the result.

The complete example is listed below.

    # plot of the bounds on xavier weight initialization for different numbers of inputs
    from math import sqrt
    from matplotlib import pyplot
    # define the number of inputs from 1 to 100
    values = [i for i in range(1, 101)]
    # calculate the range for each number of inputs
    results = [1.0 / sqrt(n) for n in values]
    # create an error bar plot centered on 0 for each number of inputs
    pyplot.errorbar(values, [0.0 for _ in values], yerr=results)
    pyplot.show()

Running the example creates a plot that allows us to compare the range of weights with different numbers of input values.

We can see that with very few inputs the range is large, such as -1 to 1 with one input or about -0.7 to 0.7 with two. The range then narrows rapidly to about -0.22 to 0.22 at 20 inputs, after which it shrinks slowly toward -0.1 to 0.1 at 100 inputs.

Plot of Range of Xavier Weight Initialization With Inputs From One to One Hundred

Normalized Xavier Weight Initialization

The normalized xavier initialization method is calculated as a random number with a uniform probability distribution (U) in the range -(sqrt(6)/sqrt(n + m)) to sqrt(6)/sqrt(n + m), where n is the number of inputs to the node (e.g. number of nodes in the previous layer) and m is the number of outputs from the layer (e.g. number of nodes in the current layer).

- weight = U [-(sqrt(6)/sqrt(n + m)), sqrt(6)/sqrt(n + m)]

We can implement this directly in Python as we did in the previous section and summarize the statistics of 1,000 generated weights.

The complete example is listed below.

    # example of the normalized xavier weight initialization
    from math import sqrt
    from numpy.random import rand
    # number of nodes in the previous layer
    n = 10
    # number of nodes in the current layer
    m = 20
    # calculate the range for the weights
    lower, upper = -(sqrt(6.0) / sqrt(n + m)), (sqrt(6.0) / sqrt(n + m))
    # generate random numbers
    numbers = rand(1000)
    # scale to the desired range
    scaled = lower + numbers * (upper - lower)
    # summarize
    print(lower, upper)
    print(scaled.min(), scaled.max())
    print(scaled.mean(), scaled.std())

Running the example generates the weights and prints the summary statistics.

We can see that the bounds of the weight values are about -0.447 and 0.447. These bounds would become wider with fewer inputs and more narrow with more inputs.

We can see that the generated weights respect these bounds and that the mean weight value is close to zero with the standard deviation close to 0.26.

    -0.44721359549995787 0.44721359549995787
    -0.4447861894315135 0.4463641245392874
    -0.01135636099916006 0.2581340352889168
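The same calculation can be wrapped in a helper that returns the weight matrix for a layer. The function name `normalized_xavier_weights()` and the matrix layout (rows for inputs, columns for nodes) are assumptions for this sketch. It also prints sqrt(2/(n + m)), which is the theoretical standard deviation of a uniform distribution with these bounds.

```python
# sketch: normalized xavier initialization for the weight matrix of a whole layer
from math import sqrt
from numpy.random import default_rng

def normalized_xavier_weights(n_inputs, n_outputs, rng):
    # hypothetical helper: uniform weights in [-sqrt(6)/sqrt(n + m), sqrt(6)/sqrt(n + m)]
    limit = sqrt(6.0) / sqrt(n_inputs + n_outputs)
    return rng.uniform(-limit, limit, size=(n_inputs, n_outputs))

rng = default_rng(1)
# number of nodes in the previous layer and in the current layer
n, m = 10, 20
weights = normalized_xavier_weights(n, m, rng)
print(weights.shape)
# sample standard deviation next to the theoretical value sqrt(2 / (n + m))
print(weights.std(), sqrt(2.0 / (n + m)))
```

For n = 10 and m = 20 the theoretical value is about 0.258, in line with the standard deviation reported by the example above.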

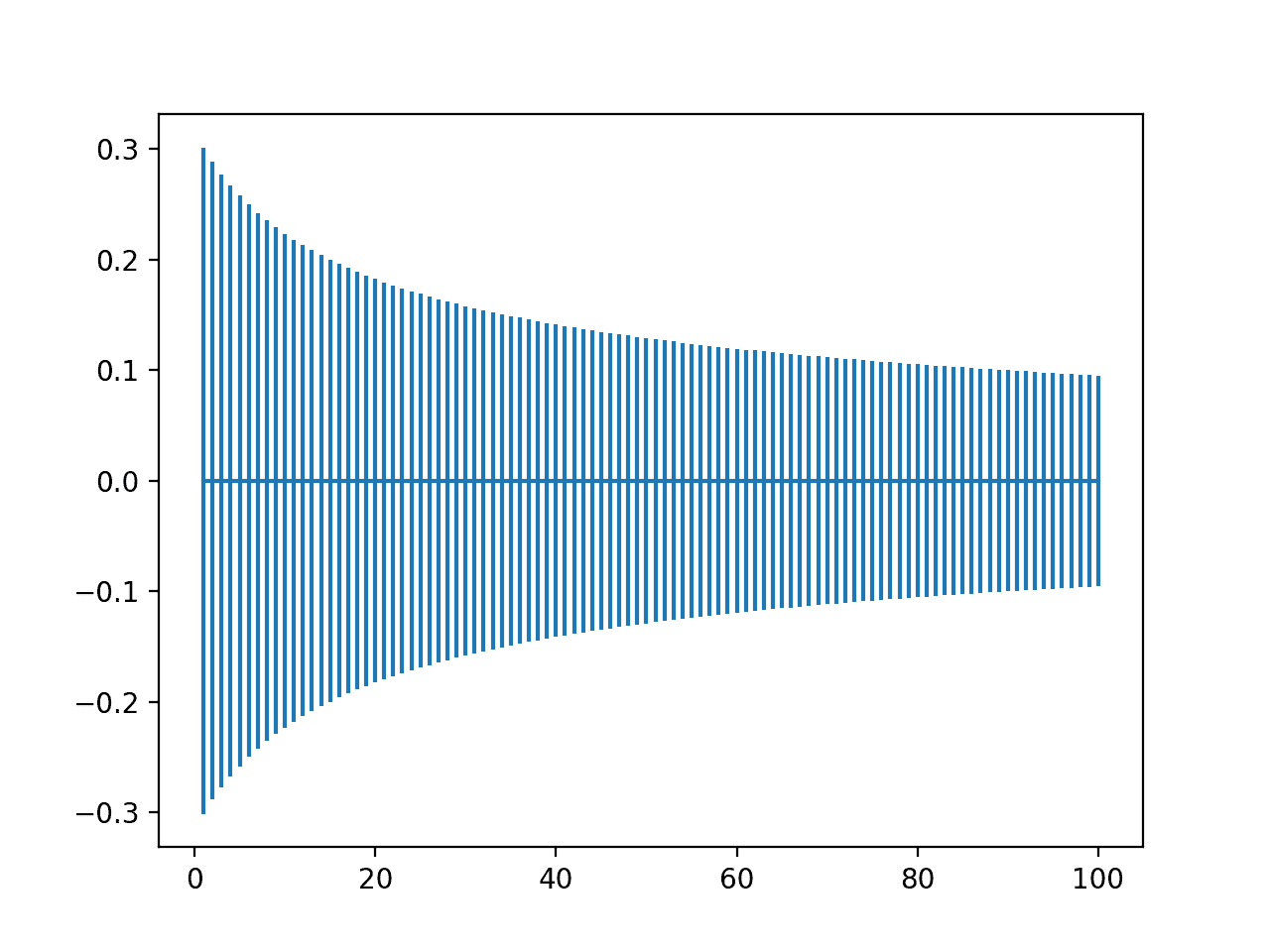

It can also help to see how the spread of the weights changes with the number of inputs.

For this, we can calculate the bounds on the weight initialization with different numbers of inputs from 1 to 100 and a fixed number of 10 outputs and plot the result.

The complete example is listed below.

    # plot of the bounds of normalized xavier weight initialization for different numbers of inputs
    from math import sqrt
    from matplotlib import pyplot
    # define the number of inputs from 1 to 100
    values = [i for i in range(1, 101)]
    # define the number of outputs
    m = 10
    # calculate the range for each number of inputs
    results = [sqrt(6.0) / sqrt(n + m) for n in values]
    # create an error bar plot centered on 0 for each number of inputs
    pyplot.errorbar(values, [0.0 for _ in values], yerr=results)
    pyplot.show()

Running the example creates a plot that allows us to compare the range of weights with different numbers of input values.

We can see that the range starts wide at about -0.74 to 0.74 with one input and narrows to about -0.23 to 0.23 at 100 inputs.

Compared to the non-normalized version in the previous section, the range is smaller only for a single input. Because the fixed 10 outputs also appear in the denominator, the range shrinks more slowly as inputs are added and stays wider from about three inputs onward.

Plot of Range of Normalized Xavier Weight Initialization With Inputs From One to One Hundred

Weight Initialization for ReLU

The “xavier” weight initialization was found to have problems when used to initialize networks that use the rectified linear (ReLU) activation function.

As such, a modified version of the approach was developed specifically for nodes and layers that use ReLU activation, popular in the hidden layers of most multilayer Perceptron and convolutional neural network models.

The current standard approach for initialization of the weights of neural network layers and nodes that use the rectified linear (ReLU) activation function is called “he” initialization.

It is named for Kaiming He, currently a research scientist at Facebook, and was described in the 2015 paper by Kaiming He, et al. titled “Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification.”
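The problem can be sketched by passing random inputs through a deep stack of ReLU layers and measuring the size of the final activations. The sketch compares xavier weights with Gaussian weights that have a standard deviation of sqrt(2/n), the he heuristic defined in the next section. The depth, the layer width, and the helper `final_activation_rms()` are arbitrary choices for illustration.

```python
# sketch: signal size after many ReLU layers with xavier versus he weights
from math import sqrt
from numpy import maximum
from numpy.random import default_rng

def final_activation_rms(init, n_layers=20, n_nodes=256, seed=1):
    rng = default_rng(seed)
    # batch of 100 random input patterns
    a = rng.standard_normal((100, n_nodes))
    for _ in range(n_layers):
        if init == 'xavier':
            # uniform weights in [-1/sqrt(n), 1/sqrt(n)]
            limit = 1.0 / sqrt(n_nodes)
            w = rng.uniform(-limit, limit, size=(n_nodes, n_nodes))
        else:
            # Gaussian weights with standard deviation sqrt(2/n)
            w = rng.normal(0.0, sqrt(2.0 / n_nodes), size=(n_nodes, n_nodes))
        # ReLU activation
        a = maximum(0.0, a @ w)
    # root mean square of the activations leaving the last layer
    return sqrt((a ** 2).mean())

xavier_rms = final_activation_rms('xavier')
he_rms = final_activation_rms('he')
print(xavier_rms, he_rms)
```

In this sketch the activations shrink toward zero with the xavier weights, because ReLU sets roughly half of each layer's pre-activations to zero, whereas the he weights keep the activations at a similar size from layer to layer.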

He Weight Initialization

The he initialization method is calculated as a random number with a Gaussian probability distribution (G) with a mean of 0.0 and a standard deviation of sqrt(2/n), where n is the number of inputs to the node.

- weight = G (0.0, sqrt(2/n))

We can implement this directly in Python.

The example below assumes 10 inputs to a node, then calculates the standard deviation of the Gaussian distribution and calculates 1,000 initial weight values that could be used for the nodes in a layer or a network that uses the ReLU activation function.

After calculating the weights, the calculated standard deviation is printed as are the min, max, mean, and standard deviation of the generated weights.

The complete example is listed below.

    # example of the he weight initialization
    from math import sqrt
    from numpy.random import randn
    # number of nodes in the previous layer
    n = 10
    # calculate the standard deviation for the weights
    std = sqrt(2.0 / n)
    # generate random numbers from a standard Gaussian
    numbers = randn(1000)
    # scale to the desired standard deviation
    scaled = numbers * std
    # summarize
    print(std)
    print(scaled.min(), scaled.max())
    print(scaled.mean(), scaled.std())

Running the example generates the weights and prints the summary statistics.

We can see that the calculated standard deviation of the weights is about 0.447. This standard deviation would become larger with fewer inputs and smaller with more inputs.

We can see that the range of the weights is about -1.573 to 1.433, which falls within the range of about -1.788 to 1.788 given by four standard deviations either side of the mean, an interval that captures more than 99.99% of observations in the Gaussian distribution. We can also see that the mean and standard deviation of the generated weights are close to the prescribed 0.0 and 0.447 respectively.

    0.4472135954999579
    -1.5736761136523203 1.433348584081719
    -0.00023406487278826836 0.4522609460629265
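As with the previous heuristics, the calculation can be wrapped in a helper that returns the weight matrix for a layer. The function name `he_weights()`, the matrix layout, and the layer size of 500 nodes are assumptions for this sketch.

```python
# sketch: he initialization for the weight matrix of a whole layer
from math import sqrt
from numpy.random import default_rng

def he_weights(n_inputs, n_outputs, rng):
    # hypothetical helper: Gaussian weights with mean 0.0 and std sqrt(2/n)
    std = sqrt(2.0 / n_inputs)
    return rng.normal(0.0, std, size=(n_inputs, n_outputs))

rng = default_rng(1)
# layer with 10 inputs and 500 nodes
weights = he_weights(10, 500, rng)
print(weights.shape)
# sample mean and standard deviation, expected near 0.0 and sqrt(2/10)
print(weights.mean(), weights.std())
```

Only the number of inputs affects the spread of the weights. The number of nodes in the layer changes the shape of the matrix but not the standard deviation.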

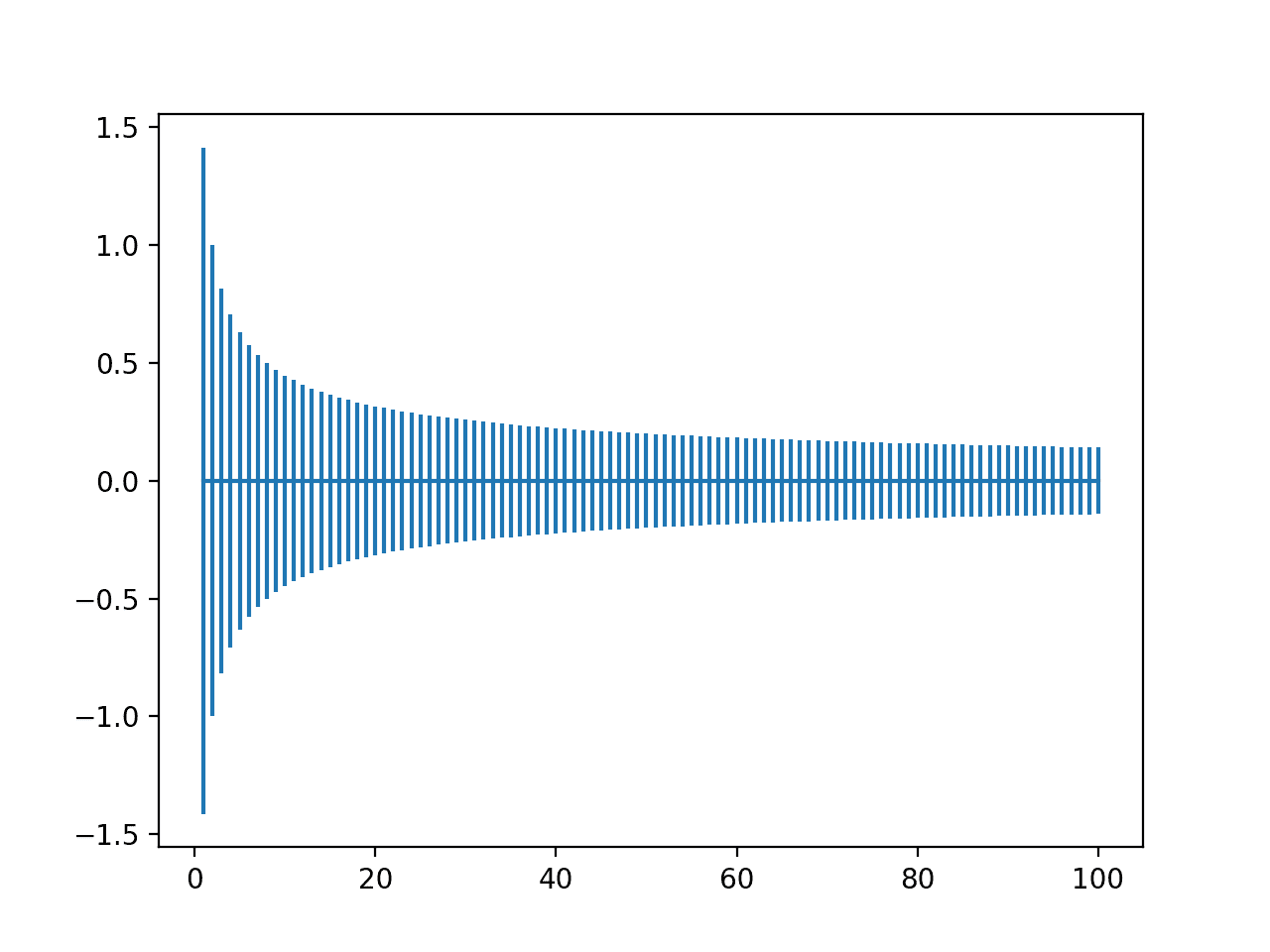

It can also help to see how the spread of the weights changes with the number of inputs.

For this, we can calculate the bounds on the weight initialization with different numbers of inputs from 1 to 100 and plot the result.

The complete example is listed below.

    # plot of the bounds on he weight initialization for different numbers of inputs
    from math import sqrt
    from matplotlib import pyplot
    # define the number of inputs from 1 to 100
    values = [i for i in range(1, 101)]
    # calculate the range for each number of inputs
    results = [sqrt(2.0 / n) for n in values]
    # create an error bar plot centered on 0 for each number of inputs
    pyplot.errorbar(values, [0.0 for _ in values], yerr=results)
    pyplot.show()

Running the example creates a plot that allows us to compare the range of weights with different numbers of input values.

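We can see that with very few inputs the spread is large, about -1.4 to 1.4 with one input or -1.0 to 1.0 with two. It then drops rapidly to about -0.32 to 0.32 at 20 inputs, after which it shrinks slowly toward -0.14 to 0.14 at 100 inputs. Note that these error bars show one standard deviation of the Gaussian distribution rather than a hard bound on the weights.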

Plot of Range of He Weight Initialization With Inputs From One to One Hundred

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

- Why Initialize a Neural Network with Random Weights?

- A Gentle Introduction to the Rectified Linear Unit (ReLU)

Papers

- Understanding The Difficulty Of Training Deep Feedforward Neural Networks, 2010.

- Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, 2015.

Books

- Deep Learning, 2016.

Summary

In this tutorial, you discovered how to implement weight initialization techniques for deep learning neural networks.

Specifically, you learned:

- Weight initialization is used to define the initial values for the parameters in neural network models prior to training the models on a dataset.

- How to implement the xavier and normalized xavier weight initialization heuristics used for nodes that use the Sigmoid or Tanh activation functions.

- How to implement the he weight initialization heuristic used for nodes that use the ReLU activation function.
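The heuristics covered in this tutorial can be gathered into one helper that selects a scheme from the activation function. This is only a sketch: the function name `initialize_layer()`, the activation names, and the choice of the normalized xavier variant for Sigmoid and Tanh are assumptions, not part of any library.

```python
# sketch: choose a weight initialization heuristic from the activation function
from math import sqrt
from numpy.random import default_rng

def initialize_layer(n_inputs, n_outputs, activation, rng):
    # hypothetical helper returning a weight matrix of shape (n_inputs, n_outputs)
    if activation in ('sigmoid', 'tanh'):
        # normalized xavier: U[-sqrt(6)/sqrt(n + m), sqrt(6)/sqrt(n + m)]
        limit = sqrt(6.0) / sqrt(n_inputs + n_outputs)
        return rng.uniform(-limit, limit, size=(n_inputs, n_outputs))
    if activation == 'relu':
        # he: G(0.0, sqrt(2/n))
        return rng.normal(0.0, sqrt(2.0 / n_inputs), size=(n_inputs, n_outputs))
    raise ValueError('unsupported activation: %s' % activation)

rng = default_rng(1)
# a tanh layer with 10 inputs and 20 nodes, then a relu layer with 5 nodes
w_tanh = initialize_layer(10, 20, 'tanh', rng)
w_relu = initialize_layer(20, 5, 'relu', rng)
print(w_tanh.shape, w_tanh.std())
print(w_relu.shape, w_relu.std())
```

Raising an error for any other activation keeps the sketch honest about which cases the tutorial covers.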


The post Weight Initialization for Deep Learning Neural Networks appeared first on Machine Learning Mastery.