Like us, whales sing. But unlike us, their songs can travel hundreds of miles underwater. Those songs potentially help them find a partner, communicate and migrate around the world. But what if we could use these songs and machine learning to better protect them?

Despite decades of being protected against whaling, 15 species of whales are still listed under the Endangered Species Act. Even species that are successfully recovering—such as humpback whales—suffer from threats like entanglement in fishing gear and collisions with vessels, which are among the leading causes of non-natural deaths for whales.

To better protect these animals, the first step is to know where they are and when, so that we can mitigate the risks they face, whether that means putting the right marine protected areas in place or warning vessels. Since most whales and dolphins spend very little time at the surface of the water, finding and counting them visually is very difficult. This is why NOAA’s Pacific Islands Fisheries Science Center, which is responsible for monitoring populations of whales and other marine mammals in U.S. Pacific waters, relies instead on listening with underwater audio recorders.

NOAA has been using High-frequency Acoustic Recording Packages (HARPs) to record underwater audio at 12 different sites in the Pacific Ocean, some starting as early as 2005. They have accumulated over 170,000 hours of underwater audio recordings. It would take over 19 years for someone to listen to all of it, working 24 hours a day!
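For the curious, the 19-year figure is easy to check. The short sketch below redoes the arithmetic from the 170,000 hours quoted above; the constant names are ours, and it assumes 365-day years with leap days ignored.

```python
# Back-of-the-envelope check of the listening time quoted above.
HOURS_RECORDED = 170_000  # total hours of audio, as stated in the post
HOURS_PER_DAY = 24        # listening around the clock, no breaks
DAYS_PER_YEAR = 365       # assumption: leap days ignored

years_nonstop = HOURS_RECORDED / (HOURS_PER_DAY * DAYS_PER_YEAR)
print(f"{years_nonstop:.1f} years of nonstop listening")
```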

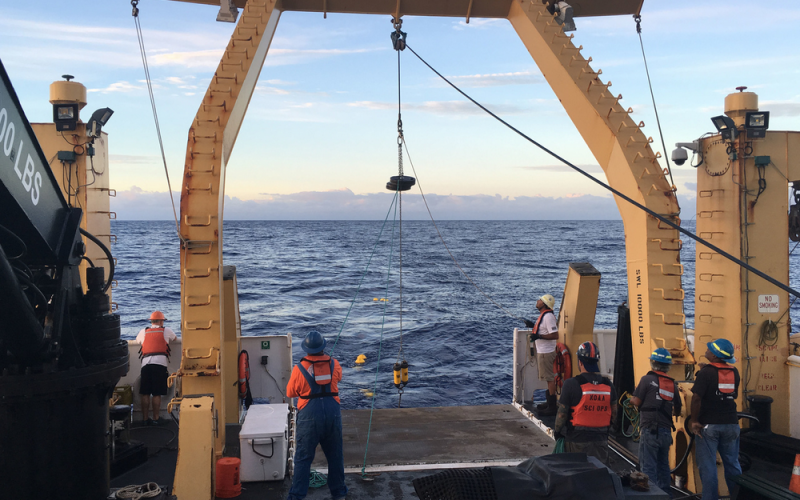

Crew members deploy a high-frequency acoustic recording package (HARP) to detect cetacean sounds underwater (Photo credit: NOAA Fisheries).

To help tackle this problem, we teamed up with NOAA to train a deep neural network that automatically identifies which whale species are calling in these very long underwater recordings, starting with humpback whales. The effort fits into our AI for Social Good program, applying the latest in machine learning to the world’s biggest social, humanitarian and environmental challenges.

The problem of picking out humpback whale songs underwater is particularly difficult to solve for several reasons. Underwater noise conditions can vary: for example, the presence of rain or boat noises can confuse a machine learning model. The distance between a recorder and the whales can cause the calls to be very faint. Finally, humpback whale calls are particularly difficult to classify because they are not stereotyped like blue or fin whale calls—instead, humpbacks produce complex songs and a variety of vocalizations that change over time.

We decided to leverage Google’s existing work on large-scale sound classification and train a humpback whale classifier on NOAA’s partially annotated underwater data set. We started by turning the underwater audio data into a visual representation of the sound called a spectrogram, and then showed our algorithm many example spectrograms that were labeled with the correct species name. The more examples we can show it, the better our algorithm gets at automatically identifying those sounds. For a deeper dive (ahem) into the techniques we used, check out our Google AI blog post.
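To give a feel for what a spectrogram is, here is a minimal sketch in plain Python. It is not the pipeline we built with NOAA: the function names, frame length, hop size and the synthetic 500 Hz tone are all assumptions chosen to keep the example small. It slices a signal into short overlapping frames and measures how much energy each frame holds at each frequency, which is the grid of numbers that gets drawn as an image.

```python
import cmath
import math

FRAME_LEN = 64  # samples per frame (illustrative choice)
HOP = 32        # samples between frame starts (50% overlap)

def hann(n):
    """Hann window of length n, used to taper the edges of each frame."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def spectrogram(samples, frame_len=FRAME_LEN, hop=HOP):
    """Return one list of magnitudes (one per frequency bin) for each frame."""
    window = hann(frame_len)
    n_bins = frame_len // 2 + 1  # keep only the non-negative frequencies
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = [samples[start + i] * window[i] for i in range(frame_len)]
        mags = []
        for k in range(n_bins):
            # Naive discrete Fourier transform for bin k; slow, but fine for a demo.
            acc = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            mags.append(abs(acc))
        frames.append(mags)
    return frames

# Synthetic stand-in for a recording: a pure 500 Hz tone sampled at 4 kHz.
sample_rate = 4000
tone_hz = 500
signal = [math.sin(2 * math.pi * tone_hz * t / sample_rate) for t in range(1024)]

spec = spectrogram(signal)

# The brightest bin in the first frame should sit at the tone's frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * sample_rate / FRAME_LEN
print(f"{len(spec)} frames, strongest frequency near {peak_hz:.0f} Hz")
```

A real recording would be far longer and noisier, and a production system would use a fast Fourier transform from a numerical library rather than the naive loop shown here. The idea is the same, though: time runs along one axis, frequency along the other, and a classifier learns to recognize the patterns that whale calls leave in that picture.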

Now that we can find and identify humpback whales in recordings, we can understand where they are and where they are going, as shown by the animation below.

Since 2005, NOAA’s Pacific Islands Fisheries Science Center has deployed, recovered and collected recordings from hydrophones moored on the ocean bottom at 12 sites. On this map, you can see the spots where more whales were found by our classifier in orange and yellow.

In the future, we plan to use our classifier to help NOAA better understand humpback whales by identifying changes in breeding location or migration paths, changes in relative abundance (which can be related to human activity), changes in song over the years and differences in song between populations. This could also help directly protect whales by advising vessels to modify their routes when a lot of whales are present in a certain area. Such work is already being done for right whales, which are easier to monitor because of their relatively simple sounds.

The ocean is big and humpback whales are not the only ones to make noise, so we have also started training our classifier on the sounds of more species (like the southern resident killer whale, which is critically endangered). We can’t see the species that live underwater, but we can hear a lot of them. With the help of machine learning, we hope that one day we can detect and classify the sounds of many of these species, giving biologists around the world the information they need to better understand and protect them.

A humpback whale breaching at the surface of the water. (Photo credit: Hawaiian Islands Humpback Whale National Marine Sanctuary.)