Even the simplest human tasks are unbelievably complex. The way we perceive and interact with the world requires a lifetime of accumulated experience and context. For example, if a person tells you, “I am running out of time,” you don’t immediately worry they are jogging on a street where the space-time continuum ceases to exist. You understand that they’re probably coming up against a deadline. And if they hurriedly walk toward a closed door, you don’t brace for a collision, because you trust this person can open the door, whether by turning a knob or pulling a handle.

A robot doesn’t innately have that understanding. And that’s the inherent challenge of programming helpful robots that can interact with humans. We know it as “Moravec’s paradox” — the idea that in robotics, it’s the easiest things that are the most difficult to program a robot to do. This is because we’ve had all of human evolution to master our basic motor skills, but relatively speaking, humans have only just learned algebra.

In other words, there’s a genius to human beings — from understanding idioms to manipulating our physical environments — where it seems like we just “get it.” The same can’t be said for robots.

Today, robots by and large exist in industrial environments, and are painstakingly coded for narrow tasks. This makes it impossible for them to adapt to the unpredictability of the real world. That’s why Google Research and Everyday Robots are working together to combine the best of language models with robot learning.

Called PaLM-SayCan, this joint research uses PaLM — or Pathways Language Model — in a robot learning model running on an Everyday Robots helper robot. This effort is the first implementation that uses a large-scale language model to plan for a real robot. It not only makes it possible for people to communicate with helper robots via text or speech, but also improves the robot’s overall performance and ability to execute more complex and abstract tasks by tapping into the world knowledge encoded in the language model.

Using language to improve robots

PaLM-SayCan enables the robot to understand the way we communicate, facilitating more natural interaction. Language is a reflection of the human mind’s ability to assemble tasks, put them in context and even reason through problems. Language models also contain enormous amounts of information about the world, and it turns out that can be pretty helpful to the robot. PaLM can help the robotic system process more complex, open-ended prompts and respond to them in ways that are reasonable and sensible.

PaLM-SayCan shows that a robot’s performance can be improved simply by enhancing the underlying language model. When the system was integrated with PaLM, compared to a less powerful baseline model, we saw a 14% improvement in the planning success rate, or the ability to map a viable approach to a task. We also saw a 13% improvement in the execution success rate, or the ability to successfully carry out a task. Put another way, the system made half as many planning mistakes as the baseline method. The biggest improvement, at 26%, is in planning long-horizon tasks, or those in which eight or more steps are involved. Here’s an example: “I left out a soda, an apple and water. Can you throw them away and then bring me a sponge to wipe the table?” Pretty demanding, if you ask me.
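To see how a 14% gain in planning success can correspond to roughly half the planning mistakes, here is a small worked example. It rests on two assumptions that are not stated in this post: the 14% is read as percentage points, and the baseline success rate of 70 is a figure chosen purely for illustration.

```python
# Assumed for illustration only: the baseline planning success rate, in percent.
baseline_success = 70
# The 14% planning improvement, read here as percentage points (an assumption).
improvement_points = 14

improved_success = baseline_success + improvement_points

# A planning mistake is any task where no viable plan was produced.
baseline_errors = 100 - baseline_success
improved_errors = 100 - improved_success

# Fraction of the baseline's mistakes that remain after the improvement.
error_ratio = improved_errors / baseline_errors

print(f"mistakes: {baseline_errors}% -> {improved_errors}% (ratio {error_ratio:.2f})")
```

Under these assumed numbers, the share of failed plans drops from 30% to 16%, which is close to half.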

Making sense of the world through language

With PaLM, we’re seeing new capabilities emerge in the language domain such as reasoning via chain of thought prompting. This allows us to see and improve how the model interprets the task. For example, if you show the model a handful of examples with the thought process behind how to respond to a query, it learns to reason through those prompts. This is similar to how we learn by showing our work on our algebra homework.

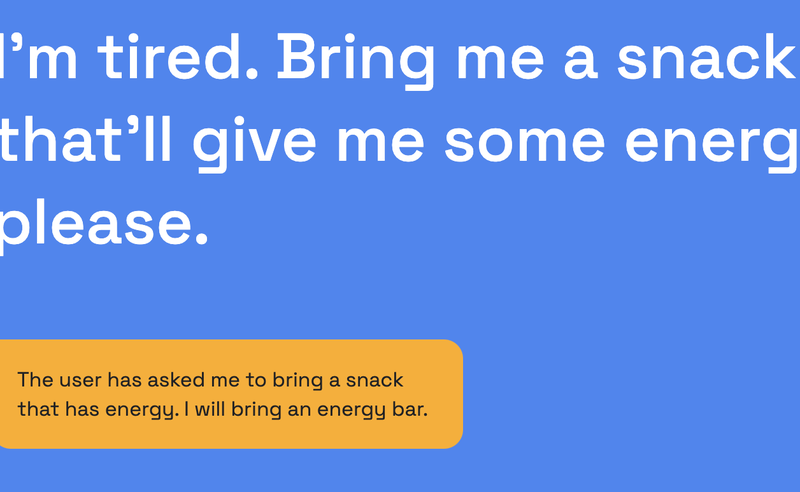

So if you ask PaLM-SayCan, “Bring me a snack and something to wash it down with,” it uses chain of thought prompting to recognize that a bag of chips may be a good snack, and that “wash it down” means bring a drink. Then PaLM-SayCan can respond with a series of steps to accomplish this. While we’re early in our research, this is promising for a future where robots can handle complex requests.
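As a rough sketch of what chain of thought prompting can look like in practice, the snippet below assembles a prompt from a worked example that includes its reasoning, followed by the new request. The example text, the field names and the `build_prompt` helper are all hypothetical; this is not the prompt format used by PaLM-SayCan.

```python
# Hypothetical worked example; the wording is invented for illustration.
EXAMPLES = [
    {
        "request": "Bring me something to clean up a spill.",
        "explanation": "A spill can be wiped up with a sponge.",
        "plan": ["find a sponge", "pick up the sponge", "bring it to the user"],
    },
]


def build_prompt(examples, request):
    """Show each example with its reasoning, then leave the new reasoning open."""
    lines = []
    for ex in examples:
        lines.append(f"Request: {ex['request']}")
        lines.append(f"Explanation: {ex['explanation']}")
        steps = ", ".join(f"{i}. {step}" for i, step in enumerate(ex["plan"], start=1))
        lines.append(f"Plan: {steps}")
        lines.append("")  # blank line between examples
    # The prompt ends at "Explanation:" so the model writes its reasoning first.
    lines.append(f"Request: {request}")
    lines.append("Explanation:")
    return "\n".join(lines)


prompt = build_prompt(EXAMPLES, "Bring me a snack and something to wash it down with.")
print(prompt)
```

Ending the prompt at the explanation step nudges the model to spell out its reasoning before listing steps, which is what makes its interpretation of the request visible.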

Grounding language through experience

Complexity exists in both language and the environments around us. That’s why grounding artificial intelligence in the real world is a critical part of what we do in Google Research. A language model may suggest something that appears reasonable and helpful, but may not be safe or realistic in a given setting. Robots, on the other hand, have been trained to know what is possible given the environment. By fusing language and robotic knowledge, we’re able to improve the overall performance of a robotic system.

Here’s how this works in PaLM-SayCan: PaLM suggests possible approaches to the task based on language understanding, and the robot models do the same based on the feasible skill set. The combined system then cross-references the two to help identify more helpful and achievable approaches for the robot.

For example, if you ask the language model, “I spilled my drink, can you help?” it may suggest you try using a vacuum. This seems like a perfectly reasonable way to clean up a mess, but generally, it’s probably not a good idea to use a vacuum on a liquid spill. And if the robot can’t pick up a vacuum or operate it, it’s not a particularly helpful way to approach the task. Together, the two may instead be able to realize “bring a sponge” is both possible and more helpful.
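One simple way to picture this cross-referencing is to give each candidate skill two scores, one from the language model and one from the robot, and multiply them. The sketch below does that for the spilled-drink example. The skill names, the score values and the helper functions are hypothetical, and multiplying the scores is an assumption made for illustration rather than a description of the production system.

```python
# Hypothetical scores for illustration only; not values from PaLM-SayCan.
# language_score: how helpful the language model rates a skill for the request.
language_score = {
    "use a vacuum": 0.50,
    "bring a sponge": 0.40,
    "bring a soda": 0.10,
}
# feasibility_score: how likely the robot is to succeed at the skill right now.
feasibility_score = {
    "use a vacuum": 0.05,  # the robot cannot operate a vacuum
    "bring a sponge": 0.90,
    "bring a soda": 0.90,
}


def combined_scores(language, feasibility):
    """Multiply the two scores for every skill that appears in both tables."""
    return {
        skill: language[skill] * feasibility[skill]
        for skill in feasibility
        if skill in language
    }


def pick_skill(language, feasibility):
    """Return the skill with the highest combined score."""
    scores = combined_scores(language, feasibility)
    return max(scores, key=scores.get)


best = pick_skill(language_score, feasibility_score)
print(best)
```

With these made-up numbers, the language model alone would favor the vacuum, but the combined score favors bringing a sponge because the robot can actually do it.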

Experimenting responsibly

We take a responsible approach to this research and follow Google’s AI Principles in the development of our robots. Safety is our number-one priority and especially important for a learning robot: It may act clumsily while exploring, but it should always be safe. We follow all the tried-and-true principles of robot safety, including risk assessments, physical controls, safety protocols and emergency stops. We also always implement multiple levels of safety, such as force limitations and algorithmic protections, to mitigate risky scenarios. PaLM-SayCan is constrained to commands that are safe for a robot to perform and was also developed to be highly interpretable, so we can clearly examine and learn from every decision the system makes.

Making sense of our worlds

Whether it’s moving about busy offices — or understanding common sayings — we still have many mechanical and intelligence challenges to solve in robotics. So, for now, these robots are just getting better at grabbing snacks for Googlers in our micro-kitchens.

But as we continue to uncover ways for robots to interact with our ever-changing world, we’ve found that language and robotics show enormous potential for the helpful, human-centered robots of tomorrow.